With the introduction of the EU AI Act, Europe enters a new era of digital regulation and sets global standards for the design of artificial intelligence (AI), much as it did for data protection with the General Data Protection Regulation (GDPR). This pioneering legislation aims both to promote the innovation and development of AI technologies and to ensure ethical standards and the protection of fundamental rights. For businesses, the Act marks a turning point: it not only defines new compliance challenges but also paves the way for a trustworthy and sustainable integration of AI into the business world. Its societal impact is far-reaching, as the Act seeks to strengthen citizens' trust in AI while minimizing the risks associated with deploying this powerful technology.

Overview of the EU AI Act

The EU AI Act represents an ambitious initiative by the European Union to create a harmonized legal framework for the development and application of artificial intelligence (AI).

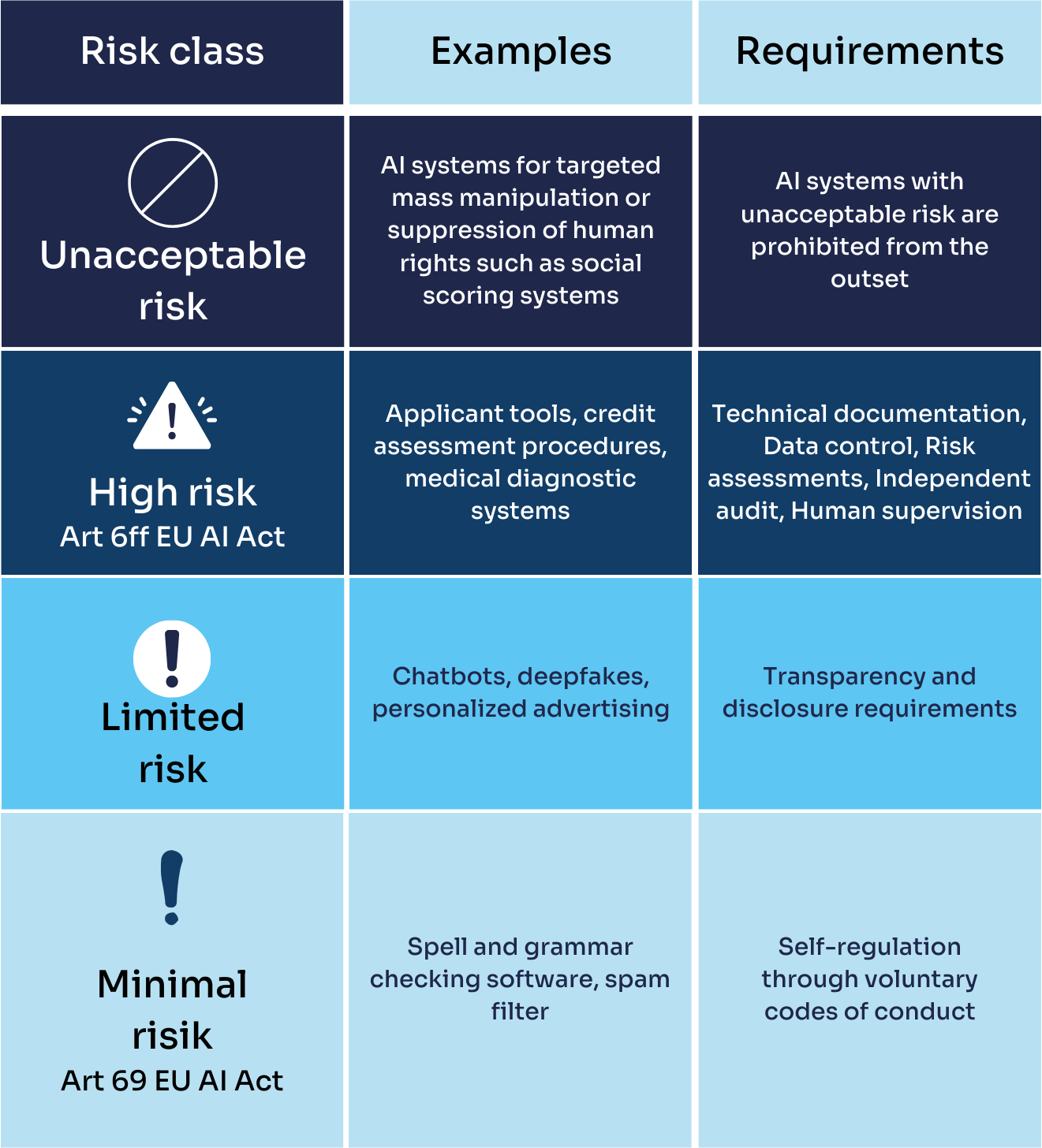

The core of this regulation is the classification of AI systems by their risk potential into four tiers: "minimal", "limited", "high", and "unacceptable". The goal is to strike a balance between promoting technological innovation and protecting citizens' fundamental rights and safety.

AI systems posing an unacceptable risk are prohibited. Examples include social scoring, meaning the systematic evaluation of people based on their social behavior, and certain forms of biometric mass surveillance. High-risk AI systems, such as those used in critical infrastructure or for assessing creditworthiness, are subject to strict transparency and monitoring requirements. Their providers must carry out a risk assessment and document that the systems meet high standards of security and data protection. The requirements for lower-risk systems are considerably lighter. Limited-risk systems, such as chatbots, are mainly subject to transparency obligations, for example informing users that they are interacting with an AI, which is meant to strengthen trust in these technologies. Minimal-risk systems, such as spam filters, are largely left unregulated.

Another key aspect of the AI Act is the conformity assessment procedure, which is intended to ensure that high-risk AI systems meet the regulatory requirements before they are placed on the market. Developers and providers of AI must therefore review their systems carefully and be able to demonstrate compliance with the legal provisions.

In summary, the EU AI Act offers a comprehensive legal framework aimed at steering the development and use of AI in a manner that enables innovative advancements while ensuring ethical standards and the protection of fundamental rights.

Implications for Businesses

The introduction of the EU AI Act brings significant obligations for businesses, which must comply with strict rules on the classification, risk management, transparency, and monitoring of AI systems. These requirements could impose a considerable burden, particularly on small and medium-sized enterprises (SMEs), which may lack the resources needed for implementation.

Obligations and Challenges

Classifying an AI system as high-risk means that companies must meet comprehensive requirements, including establishing an effective risk management system, ensuring data quality, implementing human oversight measures, and ensuring the accuracy, robustness, and cybersecurity of the systems. These requirements could be particularly challenging for SMEs, as the development and implementation of such systems require significant investments in time, expertise, and financial resources.

Compliance Requirements

The compliance requirements include not just the technical aspects of the AI systems themselves, but also extensive documentation and reporting duties. Companies must maintain detailed records of the development, operation, and evaluations of their AI systems and make this information available to regulatory authorities upon request. These regulations aim to create transparency and increase companies' accountability, yet they pose an additional administrative burden, especially for SMEs that may lack the capacity to fulfill these requirements efficiently.

At this juncture, PCG can act as your strategic and operational partner to guide you through this complex process. Our offerings include specialized consulting services to establish a risk management system tailored to the specific requirements of the EU AI Act. With a deep understanding of the necessary data quality standards, we support you in implementing robust data management practices. Furthermore, we offer training on human oversight of AI systems so that your teams have the expertise to maintain compliance while fully leveraging the benefits of AI.

Impact on Innovation

While the regulation aims to minimize risks and strengthen trust in AI technologies, there is concern that its stringent rules could also weaken innovation capacity. Extensive compliance procedures could become a barrier, especially for the startups and SMEs at the forefront of innovation. Regulation could therefore slow the development of new technologies and affect the competitiveness of European companies in the global market. These concerns are also reflected in statements from the business community warning about the potential impact of the AI Act on competitiveness and technological sovereignty in Europe.

To address these challenges, the regulation should follow a balanced approach that considers both the risks and the potential of AI. This could be achieved through flexible regulatory mechanisms, such as regulatory sandboxes, that promote innovation while ensuring robust protection of fundamental rights and safety. Close collaboration between regulatory authorities, industry, and academia could also help develop viable solutions that meet the needs of all stakeholders. In this context, PCG is ready to partner with you in navigating the complexities of the EU AI Act. With our expertise in adapting to EU regulations, we support you not only in complying with the new rules but also in strengthening and securing your company's capacity to innovate.

Critical Perspectives and Challenges

While many view the EU AI Act as a crucial step towards ensuring ethical and safe AI applications, there are also criticisms and challenges associated with its implementation.

Key Criticisms

A major concern is the potential inhibition of innovation. Critics fear that the law's strict rules, especially for high-risk AI systems, could slow the development of new technologies. These concerns focus primarily on bureaucratic barriers and comprehensive compliance requirements, which could pose significant challenges for smaller companies and startups. It is argued that the associated costs and administrative effort could hinder rather than foster innovation.

There is also concern that the EU AI Act could weaken the international competitiveness of European companies. Although the Act also applies to non-European providers whose AI systems are placed on the EU market, companies that develop and deploy AI mainly outside the EU operate under less stringent rules and could therefore gain an advantage in the global market.

International Coordination and Conflicts

Another point of debate is the need for international coordination in AI regulation. The EU AI Act sets standards that could have worldwide implications, as many companies operate globally. Differences in regulatory approaches between the EU and other regions, such as the USA or China, could lead to conflicts. Uniform global standards, or at least closer coordination between the largest economic areas, could help minimize them. The need for such coordination is heightened by the risk that divergent regulatory requirements fragment the global market, which could complicate the introduction of cross-border AI services and slow global innovation.

A specific problem in this context is the potential for regulatory arbitrage, where companies locate their research and development activities in countries with lower regulatory requirements. This could not only weaken the EU's competitiveness but also trigger a "race to the bottom" in ethical standards and the protection of citizens' rights.

The EU AI Act's approach to fostering innovation while ensuring ethical compliance and the protection of fundamental rights presents a nuanced challenge. Balancing these elements is crucial for the Act's success and its acceptance by the global business community. Collaborative efforts towards establishing shared standards and regulatory frameworks can mitigate the risk of fragmentation, promoting a cohesive advancement in AI that benefits all stakeholders while safeguarding ethical and safety standards.

Opportunities and Positive Aspects

Despite the challenges and criticisms it faces, the EU AI Act also presents significant opportunities. One of the main benefits of clear regulation is that it establishes and strengthens trust in AI technologies. By mandating transparency and strict safety standards, the Act is designed to ensure that AI applications respect users' rights and safety. This trust is crucial for the acceptance and integration of AI across many areas of life.

Furthermore, the EU AI Act plays a pivotal role in promoting ethical AI development. By creating a framework that prioritizes ethical principles such as fairness, transparency, and accountability, the Act encourages companies to integrate these principles into the development process of their AI systems from the outset. This ethical orientation helps to mitigate potential negative impacts of AI, such as discrimination or privacy violations, while maximizing positive contributions to society.

By bolstering trust in AI and promoting ethical standards, the EU AI Act lays a solid foundation for sustainable and responsible use of AI technologies. This opens up the opportunity to fully realize AI's potential while simultaneously protecting and advancing human rights.

Conclusion

While the EU AI Act represents a fundamental step towards a regulated AI future, continuous adaptation of the law to technological advancements will be essential. Furthermore, fostering international cooperation will be critical in establishing global standards and thus preventing fragmented technological progress.

The EU AI Act marks an important turning point in shaping the digital future, yet it poses significant challenges for businesses. Although the regulation has the potential to foster trust and ethical development, it may also slow innovation. Striking the delicate balance between providing protection and not hindering innovation therefore remains a pivotal task for AI regulation.